Docker is an open-source platform that enables developers to develop, package, and deploy applications in containers. It provides tools and a platform for building, shipping, and running containers across different environments consistently, whether it's a developer's laptop, a testing environment, or a production server. Docker enables you to separate your applications from your infrastructure so you can deliver software quickly. With Docker, you can manage your infrastructure in the same ways you manage your applications. By taking advantage of Docker's methodologies for shipping, testing, and deploying code, you can significantly reduce the delay between writing code and running it in production.

How Docker works

Docker Engine: At the core of Docker is the Docker Engine, which is responsible for building, running, and managing containers. The Docker Engine consists of several components, including:

Docker Daemon: The Docker daemon (dockerd) is a background service that runs on the host machine. It listens for Docker API requests and manages container lifecycle, image management, networking, storage, and other Docker-related tasks.

Docker CLI: The Docker Command-Line Interface (CLI) (docker) is a client tool used to interact with the Docker daemon through the Docker Engine API (a REST API, formerly called the Docker Remote API). It allows users to build, run, manage, and monitor Docker containers and images using simple commands.
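The client/daemon split described above can be observed directly. The following is a minimal sketch, assuming Docker is installed; the fallback socket path is the usual Linux default and may differ on your system or be overridden by a Docker context.

```shell
#!/bin/sh
# The CLI contacts the daemon at DOCKER_HOST; on Linux the usual default is a local Unix socket.
endpoint="${DOCKER_HOST:-unix:///var/run/docker.sock}"
echo "Default daemon endpoint: $endpoint"

if command -v docker >/dev/null 2>&1; then
    # Prints a Client section (the docker CLI) and, if the daemon answers,
    # a Server section (dockerd).
    docker version || echo "The CLI is installed, but the daemon did not answer."
else
    echo "docker CLI not found on PATH."
fi
```

If only the Client section appears, the CLI is working but the daemon is not running or not reachable.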

Container: A container is an isolated environment in which an application runs the same way regardless of the underlying infrastructure. It is portable, lightweight, scalable, and consistent, and it includes everything needed to run a piece of software: the code, runtime, system tools, libraries, and settings.

Containers vs Virtual Machines

Virtualization: Virtualization uses software to create an abstraction layer over computer hardware, so that the hardware elements of a single computer can be divided into multiple virtual computers. The software that does this is called a hypervisor: a small layer that enables multiple operating systems to run alongside each other, sharing the same physical computing resources. When a hypervisor is used on a physical computer or server, it separates the operating system and applications from the hardware, so the machine can be divided into several independent “virtual machines”.

Virtual Machines: Virtual machines (VMs) are a technology for building virtualized computing environments. A virtual machine is an emulation of a physical computer. VMs enable teams to run what appear to be multiple machines, with multiple operating systems, on a single computer. VMs interact with physical computers by using lightweight software layers called hypervisors. Hypervisors can separate VMs from one another and allocate processors, memory, and storage among them.

Containers: Containers run on top of a single host operating system and share the host's kernel. They are isolated at the process level, meaning each container has its own filesystem, network, and process space. Containers are highly portable across different environments because they encapsulate all their dependencies and rely only on the host operating system's kernel, so they can be easily moved between development machines, testing environments, and production servers. They are also ephemeral by nature: data written inside a container is lost when the container is removed, unless it is stored outside the container, for example in a volume.
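A quick way to see the shared kernel is to compare the kernel release on the host with the one reported inside a container. This is only a sketch: the `alpine` image is an assumption chosen because it is small, and the two values should match on a native Linux host. On Docker Desktop for macOS or Windows, containers run inside a Linux VM, so the values will differ.

```shell
#!/bin/sh
# Kernel release reported by the host.
host_kernel="$(uname -r)"
echo "host kernel:      $host_kernel"

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # Kernel release reported from inside a throwaway container.
    # --rm removes the container and its writable layer as soon as it exits.
    container_kernel="$(docker run --rm alpine uname -r)"
    echo "container kernel: $container_kernel"
else
    echo "Docker is not available; skipping the container half of the comparison."
fi
```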

Docker terminology

Dockerfile: A text file that contains instructions for building a Docker image. It specifies the base image, dependencies, environment variables, and commands needed to set up the application environment within the container.

Docker Image: A lightweight, standalone, and executable package that contains the application code and all its dependencies, as defined by the Dockerfile. Images are used to create containers.

Docker Container: A running instance of a Docker image. Containers are isolated environments that run on the host machine's operating system kernel but have their own filesystem, network, and process space. They can be started, stopped, paused, and deleted.

Docker Registry: A service for storing and sharing Docker images, organized into repositories. Docker Hub is the official public registry maintained by Docker.

Docker Engine: The core component of Docker that enables the creation and management of containers. It includes a daemon process (dockerd) that runs in the background and a command-line interface (CLI) tool for interacting with the Docker daemon.

Docker Compose: A tool for defining and running multi-container Docker applications. It uses a YAML file (docker-compose.yml) to configure the services, networks, and volumes required by the application and provides commands for managing the application lifecycle.

Docker Volume: A persistent data storage mechanism used by Docker containers. Volumes can be mounted into containers to store and share data between containers or between the host and containers.

Docker Network: A virtual network that allows communication between Docker containers running on the same host or across different hosts. Docker provides default network drivers for different use cases, such as bridge, overlay, and host.

Docker Swarm: Docker's native clustering and orchestration tool for managing a cluster of Docker hosts. It allows users to deploy, scale, and manage multi-container applications across a cluster of machines.

Docker Stack: A collection of services defined by a Docker Compose file and deployed as a single unit in a Docker Swarm cluster. Docker Stacks simplify the deployment and management of complex applications composed of multiple interconnected services.
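Several of the terms above (registry, image, container) map onto a handful of CLI commands. The sketch below walks through that lifecycle; the container name `glossary-demo` is hypothetical and used only for illustration, and the commands run only if a Docker daemon is reachable.

```shell
#!/bin/sh
image="nginx"          # repository on Docker Hub; the tag defaults to "latest"
name="glossary-demo"   # hypothetical container name, used only in this sketch

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    docker pull "$image"                    # registry -> local image
    docker run -d --name "$name" "$image"   # image -> running container
    docker ps --filter "name=$name"         # list the running container
    docker stop "$name"                     # stop it; the container still exists
    docker rm "$name"                       # delete the stopped container
else
    echo "Docker is not available; commands not run."
fi
```

The image stays in the local image store after the container is removed, so a second `docker run` starts without pulling again.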

# Use the official NGINX image as the base image
FROM nginx
# This line specifies the base image for the Dockerfile,
# which in this case is the official NGINX image available from the Docker Hub registry.
# It provides the necessary NGINX runtime environment to run the web server.

# (Optional) Document that the container listens on port 80
EXPOSE 80
# The EXPOSE instruction records that the application inside the container listens on port 80.
# It does not publish the port by itself; the port becomes reachable from the host
# only when it is published with -p (or -P) on docker run.

# (Optional) Set a default command to run NGINX when the container starts
CMD ["nginx", "-g", "daemon off;"]
# The CMD instruction specifies the default command to run when the container starts. In this case,
# it starts NGINX with the specified options (-g "daemon off;").
# This command ensures that NGINX runs in the foreground and doesn't daemonize,
# allowing Docker to manage the container's lifecycle effectively.

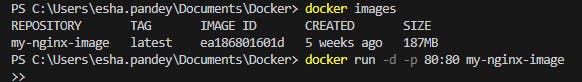

$ docker build -t my-nginx-image .

Once the image is built, you can run a container based on this image using the command: docker run -d -p 80:80 my-nginx-image

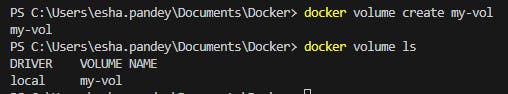
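To check that the container is actually serving traffic, you can list it and request the published port. This is a sketch that assumes the `docker run -d -p 80:80 my-nginx-image` command above was used and that `curl` is installed; it is the `-p 80:80` flag that publishes the container's port on the host.

```shell
#!/bin/sh
url="http://localhost:80/"

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    # Containers started from the image built above, with their port mappings.
    docker ps --filter "ancestor=my-nginx-image" --format '{{.ID}}  {{.Ports}}'
fi

if command -v curl >/dev/null 2>&1; then
    # -s: quiet, -o /dev/null: discard the body, -w: print only the HTTP status code.
    # "|| true" keeps the script going if nothing is listening on the port.
    code="$(curl -s --max-time 5 -o /dev/null -w '%{http_code}' "$url" || true)"
    echo "GET $url -> $code"
fi
```

A status of 200 indicates NGINX answered; a status of 000 means no connection could be made.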

Docker Volumes: Docker volumes are a mechanism for persisting and managing data generated by Docker containers. They provide a way to store and share data between containers and between containers and the host machine. Here's an explanation of Docker volumes and their key features:

Data Persistence: Docker volumes enable data persistence for containers. Unlike container filesystems, which are ephemeral and deleted when a container is removed, volumes persist even after a container is stopped or deleted. This makes volumes suitable for storing data that needs to survive container lifecycle changes.

Storage Location: Volumes are stored in a directory on the host machine's filesystem, outside of the union filesystem used for container layers. By default, Docker manages the location of volumes (on Linux, under /var/lib/docker/volumes/), but you can also specify a custom location when creating volumes.
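The persistence behavior described above can be tried with two short-lived containers that share one volume. This is a minimal sketch: the volume name `demo_data`, the `/data` mount path, and the `alpine` image are assumptions made for the example.

```shell
#!/bin/sh
volume="demo_data"   # hypothetical volume name

if command -v docker >/dev/null 2>&1 && docker info >/dev/null 2>&1; then
    docker volume create "$volume"
    # The first container writes a file into the volume, then exits and is removed.
    docker run --rm -v "$volume":/data alpine sh -c 'echo hello > /data/greeting.txt'
    # A second, brand-new container mounts the same volume and reads the file back.
    docker run --rm -v "$volume":/data alpine cat /data/greeting.txt
    # Show where Docker keeps the volume on the host.
    docker volume inspect --format '{{.Mountpoint}}' "$volume"
    # Clean up the volume once the demonstration is over.
    docker volume rm "$volume"
else
    echo "Docker is not available; commands not run."
fi
```

The file survives even though the container that wrote it no longer exists, because it lives in the volume rather than in the container's writable layer.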

Docker Compose: Docker Compose is a tool provided by Docker that simplifies the process of defining and running multi-container Docker applications. It allows you to define your application's services, networks, and volumes in a single YAML file called docker-compose.yml, and then use a single command to start, stop, and manage your entire application stack.

---

version: '3'
services:
  frontend:
    build: ./client/
    restart: always
    ports:
      - 3000:80
  backend:
    build: ./server/
    restart: always
    ports:
      - 3001:3001
  mysql:
    image: mysql/mysql-server:5.7
    command: --init-file /data/application/init.sql
    restart: always
    environment:
      MYSQL_DATABASE: employeeSystem
      MYSQL_ROOT_PASSWORD: mauFJcuf5dhRMQrjj
      MYSQL_ROOT_HOST: '%'
    ports:
      - 3306:3306
    volumes:
      - ./init.sql:/data/application/init.sql
      - mysql_server_data:/var/lib/mysql
volumes:
  mysql_server_data:
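With a file like the one above saved as docker-compose.yml, the whole stack can be managed with a few commands. This sketch assumes the Compose plugin (`docker compose`) is installed and that the ./client/ and ./server/ build directories exist; older installations may use the standalone `docker-compose` binary instead.

```shell
#!/bin/sh
compose_file="docker-compose.yml"

if [ -f "$compose_file" ] && command -v docker >/dev/null 2>&1 \
        && docker compose version >/dev/null 2>&1; then
    docker compose config --quiet         # validate the file without starting anything
    docker compose up -d --build          # build frontend/backend images and start all services
    docker compose ps                     # show service state and published ports
    docker compose logs --tail 20 mysql   # last 20 log lines from the mysql service
    docker compose down                   # stop and remove containers and the default network
else
    echo "Need $compose_file in the current directory and the Docker Compose plugin."
fi
```

`docker compose down` leaves named volumes such as mysql_server_data in place, so the database contents are still there the next time the stack is started.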